Exploring the legal boundaries of Synthetic Data

In recent years, big data has radically changed the way we live our lives, do business and conduct (scientific) research. This is reflected in the significant rise in demand for large amounts of (personal) data. Strict privacy legislation has reinforced this development, due to the fact that it is widely regarded as the main obstacle for (free) data sharing. Synthetic Data aims to offer a solution to this problem by utilizing AI to generate new, irreducible datasets that replicate the statistical correlations of real-world data. But how anonymous are these data? And can the safeguards of the General Data Protection Regulation (GDPR) truly be circumvented by using this method? In this write-up, we will consider the legal aspects of synthetic data and whether or not they are truly a blessing in disguise for the future of data sharing and, more importantly, our privacy.

What is synthetic data?

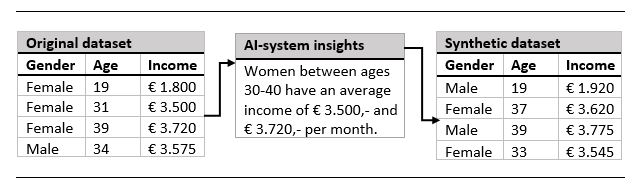

Synthetic data are artificially created data that contain many of the correlations and insights of the original dataset, without directly replicating any of the individual entries. This way, data subjects should no longer be identifiable within the new dataset.

Example of a use case for synthetic data.

Anonymisation versus pseudonymisation

Advocates of synthetic data argue that the primary benefit of synthetic data can be found in recital 29 of the GDRP, which states that the principles of data protection do not apply to personal data that have been rendered into anonymous data in such a manner that the data subject is no longer identifiable.

Be that as it may, it is still unclear whether synthetic data can actually be regarded as anonymized data. The decisive factor in these conflicting points of view is the likelihood of whether or not the controller or a third party will be able to identify any individual within the dataset, by using all reasonable measures. If this is feasible, the process should be qualified as pseudonymisation, to which the GDPR still applies.

To assess whether synthetic data can be qualified as anonymized or pseudonymized data, we need to assess the robustness of the technique that is used. To do that, The Article 29 Data Protection Working Party (WP29) provides us with three criteria that elaborate on the aptitude of an anonymisation method (i.e. generating synthetic data).[1]

- Singling out: the possibility to distinguish and identify certain individuals within the dataset.

- Linkability: the ability to link two or more datapoints concerning the same data subject within one or more different datasets.

- Inference: the possibility to deduce, with significant probability, the value of an attribute from a data subject by using the values given to other attributes within the dataset.

Whether or not synthetic datasets meet the aforementioned criteria will depend on the extent to which the synthetic datapoints will deviate from the original data. In most cases, adding ‘noise’ will not be enough: the AI-framework needs to have a system in place that actively monitors whether ample distance is kept between the newly generated datapoints and the original ones. Even then, it is highly recommended to maintain the possibility for human intervention if and when something goes awry.

With all those measures in place, there is an argument to be made to classify synthetic data as anonymous data. In that case, the GDPR will not apply to the (further) processing of synthetic data.

Not completely exempt from the GDPR

However, this does not mean that the safeguards of the GDPR can be completely circumvented by simply utilizing a synthetic dataset. The anonymisation process (i.e. generating the synthetic dataset) inherently qualifies as the processing of personal data, to which the GDPR still applies. Thus, the controller still needs a lawful basis for generating synthetic data, as well as a purpose that is compatible with the initial purpose for which the data have been collected. In most cases, the latter should not pose a problem: the WP29 is of the opinion that anonymisation as an instance of further processing can be considered compatible with the original purpose of processing, on the condition that the modus operandi meets the criteria mentioned above.[2]

Things to keep in mind

Generating synthetic data has a lot of potential, as long as the process is implemented correctly. If you are considering to utilize synthetic data, please take note of the following points:

- Evaluate whether synthetic data offers a suitable solution for the specific needs of your business or organization. The main advantage of synthetic data lies in their ability to preserve the statistical properties of the original dataset. This attribute provides a lot of utility in certain use cases, such as compute learning and (scientific) research.

- Assess whether the synthetic datasets deviate enough from the original datasets and adjust the settings of the AI-systems accordingly. In doing so, pay attention to the criteria as laid down by the WP29.

- Give thought to your obligations under the GDPR. List your lawful basis for processing, as well as the purpose for which this is done.

If you have any questions about the legal aspects of synthetic data and whether or not this anonymisation technique will be suitable for your organization, please do not hesitate to contact one of the experts at BG.Legal.

[1] WP29, Opinion 05/2014 on Anonymisation Techniques (WP 216), 10th of April 2014, p. 11 and 12.

[2] WP29, Opinion 05/2014 on Anonymisation Techniques (WP 216), 10th of April 2014, p. 7.